eldr.ai | Optimise AI Model after Training

If you haven't already, please have a look at the Training AI Models guide before going through this tutorial, as this guide is an extension of it.

After Training your Model, you may notice that you didn't reach the Loss you wanted, or you may simply want to improve it in some way. ELDR AI makes it easy to change Model Parameters without losing previous settings for the same Model. This way you can experiment with Model and Data Parameters until you get your desired Trained Model, and then easily pick and choose which Model Variant you use.

Let's highlight this with an example.

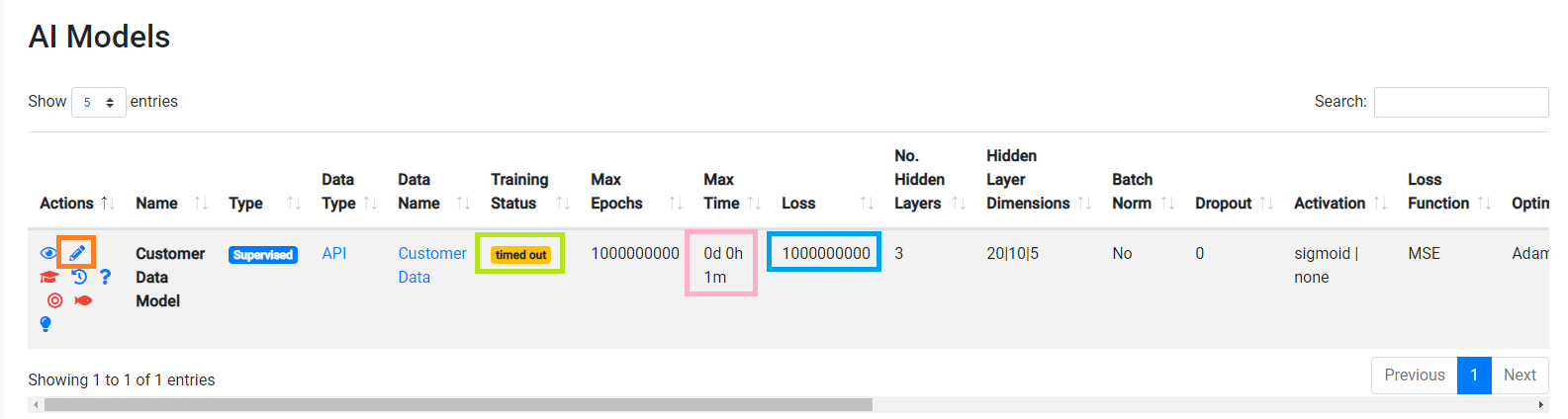

Navigate to the "View Models" page:

We've just finished training our Customer Data Model and, unfortunately, it Timed Out (green box): we didn't reach our desired Loss (blue box) within the specified maximum time.

On inspection of this Model, we can see that we set our Loss requirement far too high (blue box) and our Time too low (pink box).

We could do with changing these settings, or trying out some combinations to see what works best.

All we need to do is click the "pencil" edit icon (orange box) and make any changes to our Model, as shown in the Creating, Viewing & Editing Models tutorial.
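The Timed Out status reflects a common training pattern: Training stops at whichever limit is reached first. ELDR AI doesn't publish its exact stopping logic, so the following is only a minimal Python sketch under stated assumptions: a plain loss target (lower is better), a maximum number of Epochs and a maximum Training Time. The function `run_training`, every status string other than "Timed Out", and the toy loss and clock functions are hypothetical.

```python
import time
from typing import Callable, Tuple


def run_training(
    train_one_epoch: Callable[[int], float],
    target_loss: float,
    max_epochs: int,
    max_seconds: float,
    clock: Callable[[], float] = time.monotonic,
) -> Tuple[str, int, float]:
    """Run epochs until the loss target is met, the time budget runs out,
    or the epoch budget is spent. Returns (status, epochs_run, last_loss)."""
    start = clock()
    loss = float("inf")
    for epoch in range(1, max_epochs + 1):
        loss = train_one_epoch(epoch)          # one pass over the training data
        if loss <= target_loss:                # desired Loss reached
            return "Trained", epoch, loss
        if clock() - start >= max_seconds:     # maximum Training Time exceeded
            return "Timed Out", epoch, loss
    return "Epochs Exhausted", max_epochs, loss  # hypothetical status name


# Toy stand-ins (made up for illustration): the loss halves every epoch,
# and each call to the fake clock advances time by one "second".
def halving_loss(epoch: int) -> float:
    return 1.0 / (2 ** epoch)


def make_fake_clock() -> Callable[[], float]:
    now = [0.0]

    def clock() -> float:
        now[0] += 1.0
        return now[0]

    return clock


print(run_training(halving_loss, 0.01, 100, 3, clock=make_fake_clock()))
# ('Timed Out', 3, 0.125)
print(run_training(halving_loss, 0.01, 100, 100, clock=make_fake_clock()))
# ('Trained', 7, 0.0078125): more Training Time
print(run_training(halving_loss, 0.2, 100, 3, clock=make_fake_clock()))
# ('Trained', 3, 0.125): relaxed Loss target
```

In this toy run, either giving Training more time or relaxing the Loss target turns a Timed Out run into a successful one, which mirrors the kind of change made to the Customer Data Model.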

This is a good time to talk about the best Optimisation Approach. If your Model is not meeting the desired Loss, carry out these steps one at a time, in order:

(1) Train again! - ELDR AI Training starts from a completely random state. Occasionally, Training may get into a state it can't learn from and may stall without recovering. Repeating the Training process using the same Model Parameters can yield different results, especially on small datasets.

(2) Increase the number of Hidden Layers - generally speaking, more complex data requires more Hidden Layers. Only add one layer at a time: too many Hidden Layers can hinder learning if the dataset is small.

(3) Increase the number of Neurons in the Hidden Layers - generally speaking, more complex data requires more Neurons in each of the Hidden Layers. Start off by doubling the Neurons in the first Hidden Layer.

(4) Vary the shape of your Hidden Layers - e.g. 50 Neurons in the first layer, 25 in the next, 10 in the next, etc. Shape influences Training to a surprising degree.

(5) Increase the number of Epochs - generally speaking, more complex data requires more Epochs, or Training Loops.

(6) Increase the Training Time - generally speaking, more complex data requires more Training Time.

(7) Decrease the Loss requirement - is your Loss requirement suitable? Many problems don't need such a high Loss requirement (1/Loss).

(8) Check your Data Quality - is your Data of sufficient size and quality, with enough variability to learn from? Do you have too many rows with identical inputs leading to different outputs? Although ELDR AI will do its best to fit all data, in some instances the Data might be impossible to learn from if it contains too much contradictory information, which can skew accuracy (a lot like the human brain).

(9) Optimiser - have you tried switching between Adam and SGD?

(10) Loss Function - is your Loss Function suitable for the problem at hand?

(11) Activation - are the activation functions for your network and outputs suitable?

(12) Other Parameters - are your Learning Rate & Momentum set to allow a suitable rate of learning?

For more information on any of these, please revisit the Model Parameters tutorial.

Addressing these issues in order will fix most learning/loss problems.
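Step (8) asks whether your data has many rows with identical inputs but different outputs. If your data lives in a spreadsheet or CSV, one quick way to check before uploading is to group rows by their input columns, as in this minimal Python sketch. It assumes the input columns come first and the output column(s) last; the function name `find_contradictions` and the sample rows are made up for illustration and are not part of ELDR AI.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple


def find_contradictions(
    rows: Sequence[Sequence[Hashable]], n_inputs: int
) -> Dict[Tuple, List[Tuple]]:
    """Map each input combination that appears with more than one distinct
    output to the sorted list of outputs it was seen with."""
    outputs_by_inputs = defaultdict(set)
    for row in rows:
        inputs = tuple(row[:n_inputs])    # leading columns are the inputs
        outputs = tuple(row[n_inputs:])   # remaining columns are the outputs
        outputs_by_inputs[inputs].add(outputs)
    return {
        inputs: sorted(outputs)
        for inputs, outputs in outputs_by_inputs.items()
        if len(outputs) > 1               # same inputs, different outputs
    }


# Made-up sample rows: (city, gender, age) -> purchased?
rows = [
    ("London", "Male", 34, "Yes"),
    ("London", "Male", 34, "No"),
    ("Leeds", "Female", 29, "Yes"),
    ("Leeds", "Female", 29, "Yes"),
]
print(find_contradictions(rows, n_inputs=3))
# {('London', 'Male', 34): [('No',), ('Yes',)]}
```

Exact duplicates (the two Leeds rows) are not flagged because they agree with each other. Sorting the outputs keeps the printed result stable, and assumes output values of the same type can be compared.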

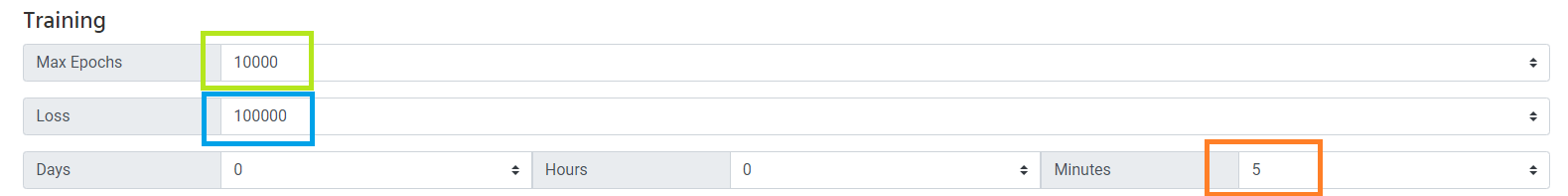

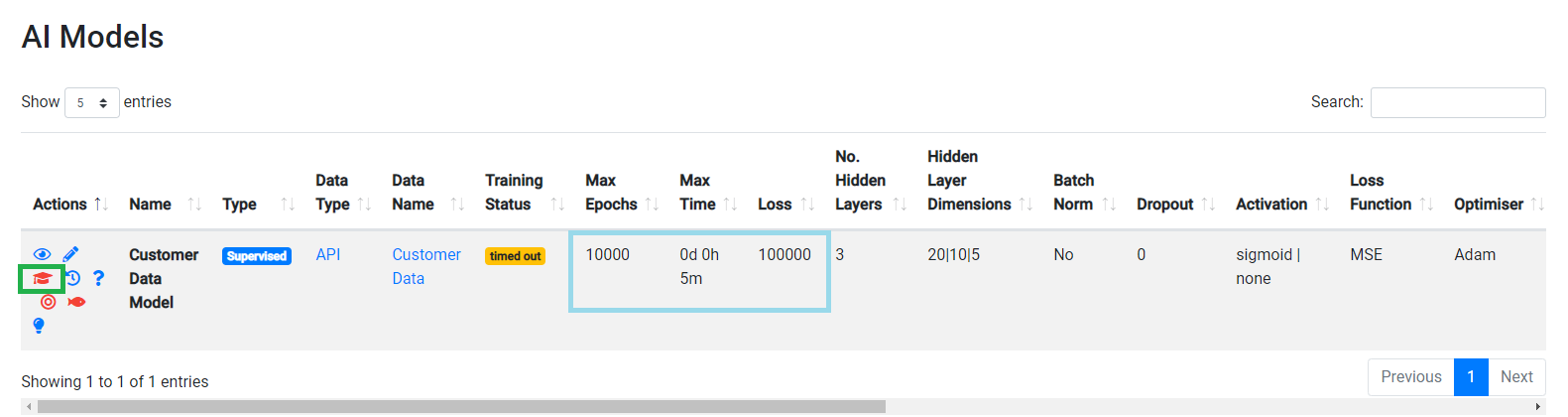

Back to the problem at hand. We make our desired changes (green, blue and orange boxes in this case), update the Model and revisit View Models, where we can see that the changes (blue box) have been applied to this Model. Let's Train the Model again (green box) and, after Training, take another look at View Models:

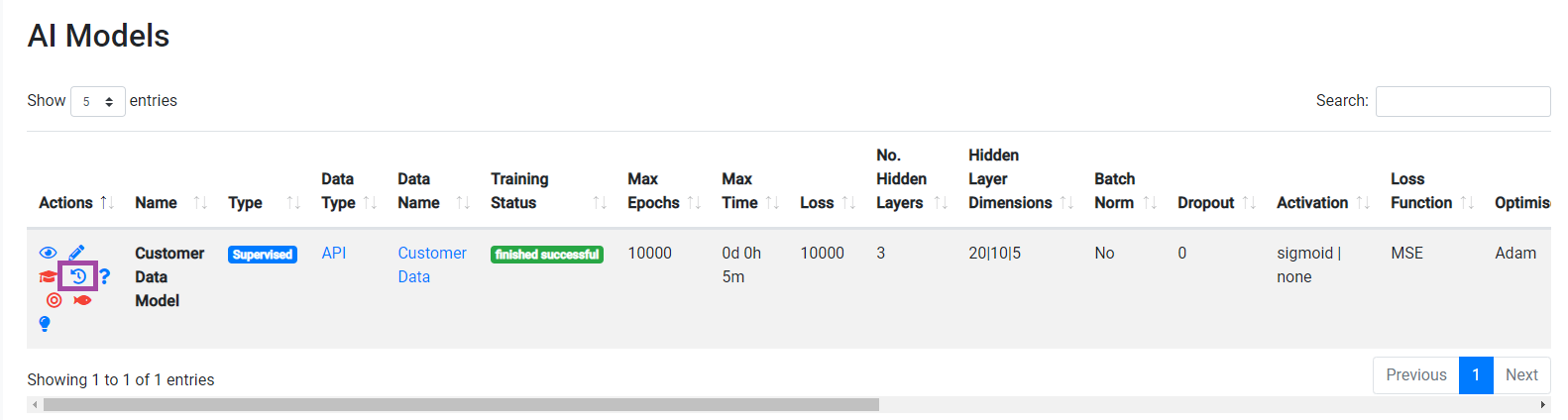

This time Training was successful. The View Models list always shows the current Model Parameters and the latest Training result.

Each time you go through this Edit Model -> Train Model cycle, ELDR AI records the Model change(s) as a Model Variant and stores every variant in the Model History.
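Conceptually, a Model History like this behaves as an append-only list of snapshots, one per Edit Model -> Train Model cycle. ELDR AI does not document how it stores variants, so the Python sketch below only illustrates the idea: `ModelVariant`, `ModelHistory`, `record`, `best` and the parameter names are all hypothetical, not ELDR AI's API, and the losses are made-up values.

```python
import copy
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass(frozen=True)
class ModelVariant:
    version: int                 # 1, 2, 3, ... in the order variants were trained
    params: Dict[str, Any]       # snapshot of the Model Parameters used
    final_loss: float            # loss reached when this variant was trained


@dataclass
class ModelHistory:
    name: str
    variants: List[ModelVariant] = field(default_factory=list)

    def record(self, params: Dict[str, Any], final_loss: float) -> ModelVariant:
        """Store a snapshot after one Edit Model -> Train Model cycle."""
        # Deep copy so later edits to `params` can't change earlier variants.
        variant = ModelVariant(len(self.variants) + 1, copy.deepcopy(params), final_loss)
        self.variants.append(variant)
        return variant

    def get(self, version: int) -> ModelVariant:
        return self.variants[version - 1]

    def latest(self) -> ModelVariant:
        return self.variants[-1]

    def best(self) -> ModelVariant:
        """Variant with the lowest recorded loss."""
        return min(self.variants, key=lambda v: v.final_loss)


# Made-up parameters and losses, for illustration only.
history = ModelHistory("Customer Data Model")
params = {"hidden_layers": [50, 25, 10], "epochs": 500, "max_time_s": 60}
history.record(params, final_loss=0.05)    # e.g. a run that Timed Out
params["max_time_s"] = 300                 # edit one parameter...
history.record(params, final_loss=0.004)   # ...and train again

print(len(history.variants))                  # 2
print(history.get(1).params["max_time_s"])    # 60: the earlier snapshot is intact
print(history.best().version)                 # 2
```

Because each variant keeps its own copy of the parameters, any of them can later be picked out and used on its own, which is the behaviour the Model History provides.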

Click the "clock" history icon (purple box):

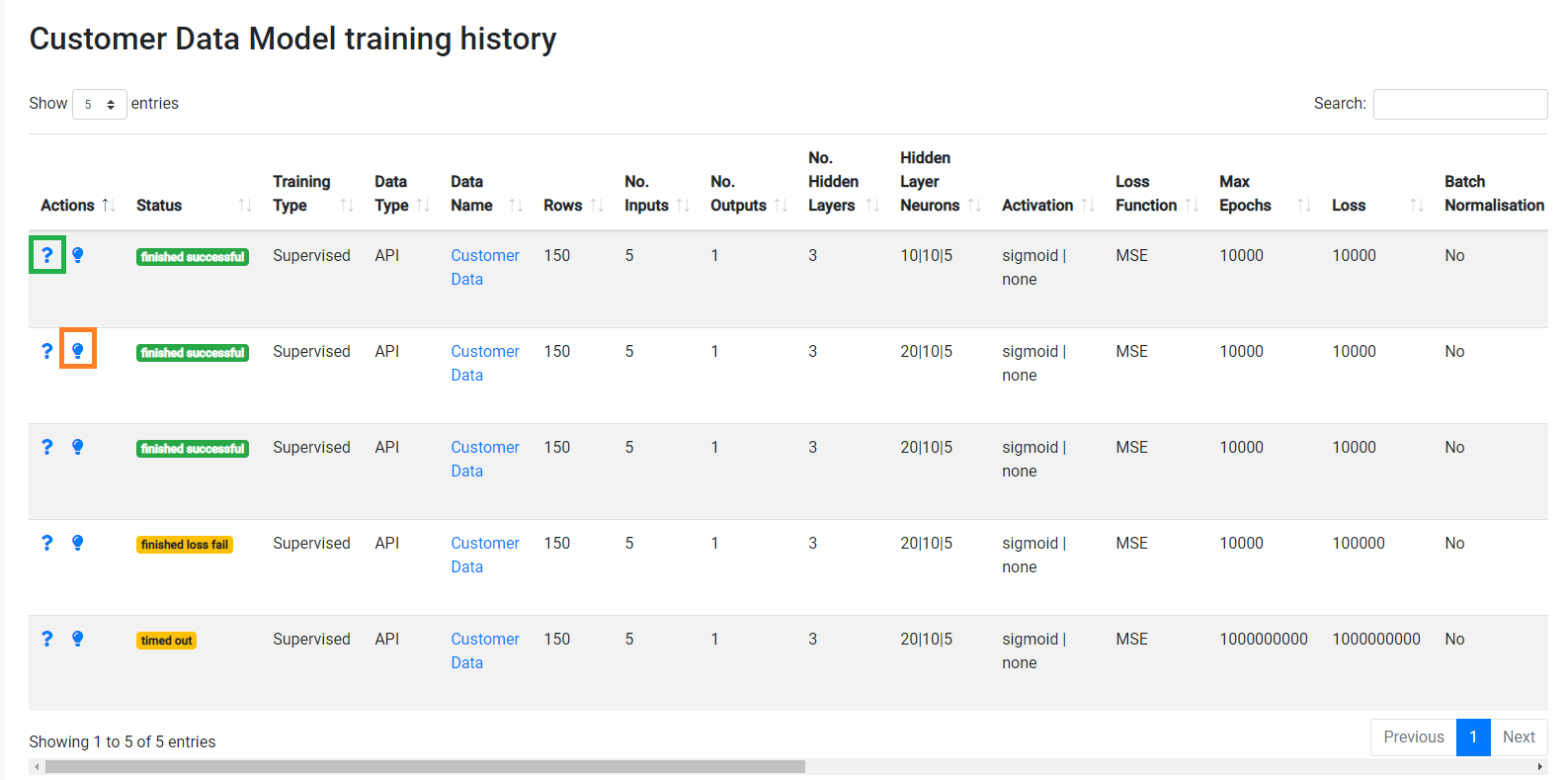

Here we have played around with some of the Model parameters and Trained the Customer Data Model after each update, resulting in five Model Variants of the same

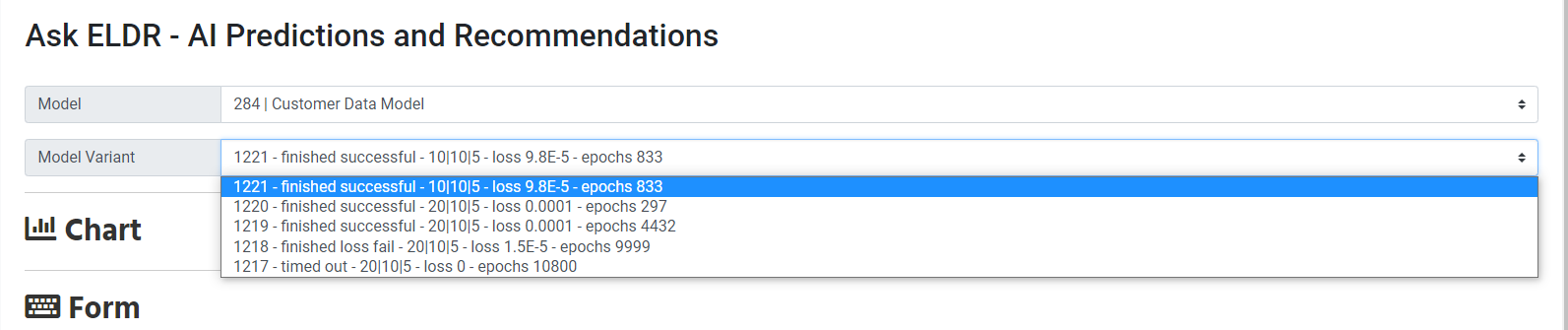

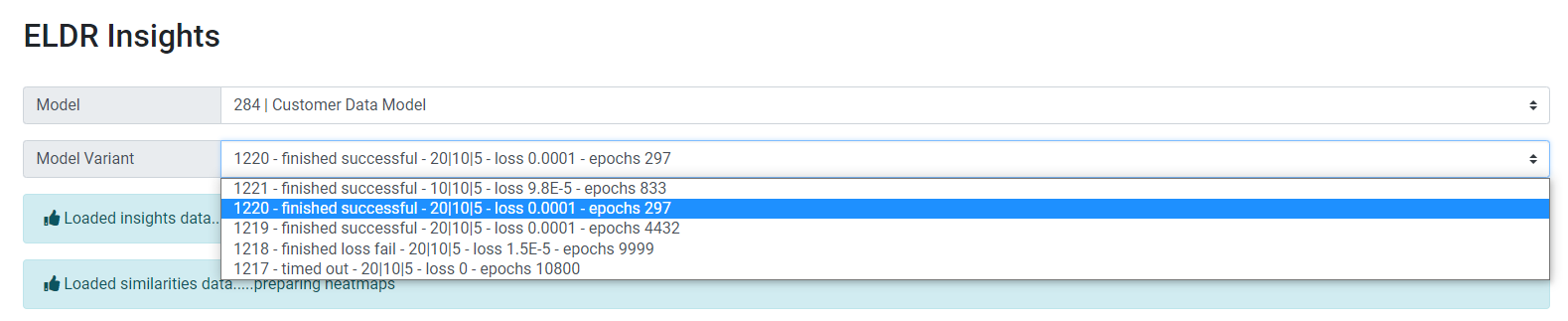

Customer Data Model. Each one of these variants can be treated as if they were an individual Model, in terms of predictions, recommendations and insights (green and orange boxes):

Above you can see how the corresponding Model Variants from the Customer Data History are represented in the Ask and Insights pages, where all variants are remembered and can be used.

That concludes the ELDR AI guide to Optimising Models.