eldr.ai | ELDR AI Model Structure

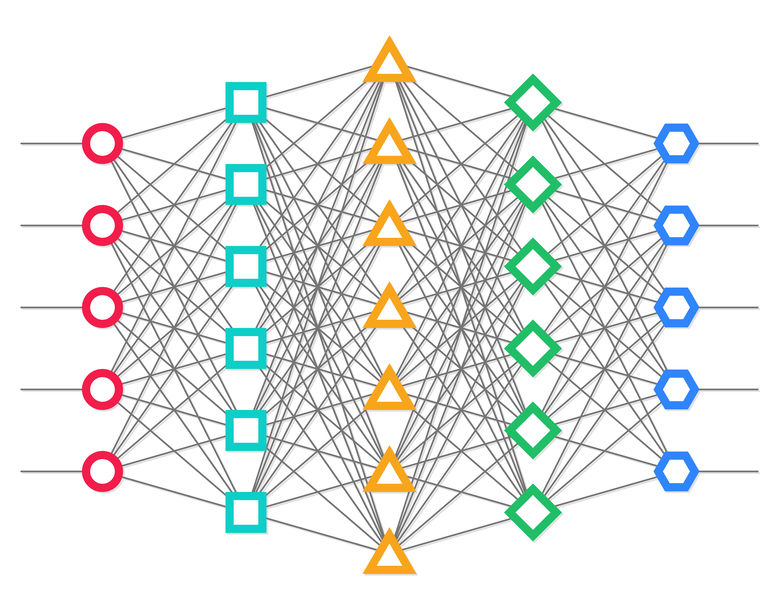

ELDR AI Models (Artificial Neural Networks) are arranged in three main layer types: one Input layer, h Hidden layers and one Output layer, along with some supporting layers: Activation, Embedding, Batch Normalisation (optional), Dropout (optional) and Loss Adjustment.

Above is a simplified block diagram of the basic structure of all ELDR AI Models.

Without getting into the maths, let's talk about what each layer is doing:

(1) data - this is simply our input data e.g. CSV, API or TEXT.

(2) ip/ipc/s - our data from above is split into values (ip), categories (ipc) and sentences (s), as determined by row 2 of your data.
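As a rough illustration of step (2), the Python sketch below groups the columns of a CSV by a type tag read from row 2. The layout is an assumption made for this example (row 1 holding column names, row 2 holding one of the tags ip, ipc, s or op, data from row 3 onward), and `split_columns` is a hypothetical helper, not part of ELDR AI.

```python
import csv
import io

# ASSUMED layout for illustration only: row 1 = column names,
# row 2 = type tags (ip, ipc, s, op), rows 3+ = data.
SAMPLE = """age,colour,review,price
ip,ipc,s,op
34,red,great value for money,19.99
51,blue,arrived late,24.50
"""

def split_columns(text):
    """Hypothetical helper: group each data row's fields by the tag in row 2."""
    rows = list(csv.reader(io.StringIO(text)))
    tags, data = rows[1], rows[2:]  # row 1 (column names) is not needed here
    groups = {"ip": [], "ipc": [], "s": [], "op": []}
    for row in data:
        split = {tag: [] for tag in groups}
        for tag, value in zip(tags, row):
            # Values (ip) and outputs (op) are numeric; categories and sentences stay text.
            split[tag].append(float(value) if tag in ("ip", "op") else value)
        for tag in groups:
            groups[tag].append(split[tag])
    return groups

groups = split_columns(SAMPLE)
print(groups["ip"])   # [[34.0], [51.0]]
print(groups["ipc"])  # [['red'], ['blue']]
```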

(3) embedding - for categorical data (ipc), i.e. non-scale data such as single words, product names, colours, product IDs, user IDs etc.

ELDR AI treats each category as a single entity, but it still needs to convert it into a numeric format that the network can use. Obviously you can't do maths on words as they are.

Embedding is a process that converts categories to numbers (it encodes them).
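A minimal sketch of the idea in plain Python: each distinct category is first given an integer ID, and that ID is then used to look up a short vector of numbers. The helper names and the 4-number vector size are illustrative assumptions, and in a trained network the vectors are learned rather than left random; this is not ELDR AI's internal code.

```python
import random

def build_vocab(values):
    """Hypothetical helper: give each distinct category an integer ID (first-seen order)."""
    vocab = {}
    for v in values:
        if v not in vocab:
            vocab[v] = len(vocab)
    return vocab

def init_embeddings(vocab_size, dim, seed=0):
    """One small random vector per category; a real model would learn these values."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(vocab_size)]

colours = ["red", "blue", "red", "green"]
vocab = build_vocab(colours)                   # {'red': 0, 'blue': 1, 'green': 2}
table = init_embeddings(len(vocab), dim=4)     # assumed embedding size of 4
vectors = [table[vocab[c]] for c in colours]   # one 4-number vector per row
```

Note that both "red" rows map to the same vector: the category is still treated as a single entity, just in numeric form.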

If you want to pass multiple words, sentences, paragraphs etc. through ELDR AI, denote the column with "s" instead of "ipc". ELDR AI will automatically encode each word within your sentence for use downstream.
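The word-by-word encoding can be pictured roughly as follows. This sketch assumes simple lowercase, whitespace-based splitting; ELDR AI's actual tokenisation rules are not described in this guide, and `encode_sentence` is a hypothetical name. Each resulting word ID would then be embedded just like a single category.

```python
def encode_sentence(sentence, vocab):
    """Hypothetical helper: lowercase, split on whitespace (an assumed rule),
    and map each word to an integer ID, adding unseen words to the vocabulary."""
    ids = []
    for word in sentence.lower().split():
        if word not in vocab:
            vocab[word] = len(vocab)
        ids.append(vocab[word])
    return ids

vocab = {}
first = encode_sentence("Great value for money", vocab)   # [0, 1, 2, 3]
second = encode_sentence("great service", vocab)          # [0, 4] - "great" reuses ID 0
```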

(4) input layer - this is the start of the Model proper. Values and Embeddings are fed raw into the Model.

(5) batch normalisation - batch normalisation is a way of keeping values in check as they pass through the network during the learning process. Although not necessary, it has been shown to speed up learning by stopping value ranges from getting too broad, meaning the network doesn't have to work as hard to learn. By default it is turned off in ELDR AI - feel free to experiment with it.
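Textbook batch normalisation subtracts the batch mean from each value, divides by the batch standard deviation, then applies a learnable scale (gamma) and shift (beta). The sketch below shows that calculation for one feature across a batch; it illustrates the standard technique and is not taken from ELDR AI's implementation.

```python
import math

def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Standard batch normalisation for one feature: shift to mean 0, scale to
    variance ~1, then apply scale (gamma) and shift (beta). eps avoids division by zero."""
    mean = sum(batch) / len(batch)
    var = sum((x - mean) ** 2 for x in batch) / len(batch)
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]

out = batch_norm([10.0, 20.0, 30.0, 40.0])
print([round(x, 3) for x in out])   # [-1.342, -0.447, 0.447, 1.342]
```

During prediction, frameworks normally use running averages of the mean and variance collected during learning instead of the statistics of the current batch.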

(6) dropout - randomly turns off neurons during the learning process, each with a defined probability. It has been shown in some circumstances, especially with a large number of neurons, to improve accuracy on data the Model has not seen before (i.e. to reduce overfitting) by literally making the network work harder to adapt to the suddenly missing neuron(s). Its exact mechanism is still not fully understood. By default it is set to 0 in ELDR AI - feel free to experiment with it.
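The sketch below shows the common "inverted dropout" variant: during learning, each value is zeroed with probability p and the survivors are scaled by 1/(1-p), so nothing needs rescaling at prediction time. Whether ELDR AI uses this exact variant is an assumption; with p = 0 (the ELDR AI default) the values pass through unchanged.

```python
import random

def dropout(values, p, rng, training=True):
    """Inverted dropout (an assumed variant): during learning, zero each value with
    probability p and scale survivors by 1/(1-p) so the expected sum is unchanged."""
    if not training or p == 0:
        return list(values)   # prediction time, or dropout disabled
    keep = 1.0 - p
    return [v / keep if rng.random() < keep else 0.0 for v in values]

rng = random.Random(42)
hidden = [0.5, 1.2, -0.3, 0.8, 2.0]
dropped = dropout(hidden, p=0.4, rng=rng)                     # some entries become 0.0
unchanged = dropout(hidden, p=0.4, rng=rng, training=False)   # identical to hidden
```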

(7) hidden layers - the hidden layer(s), of which there can be as many as you like (denoted by h), are where all the learning happens. If you have more than one hidden layer it is called Deep Learning. In these layers, each neuron in one layer influences every neuron in the next layer. During learning the level of influence is constantly adjusted until the Model fits the data; after that (during prediction) the level of influence remains constant.
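In a fully connected layer, the "influence" of each neuron on each neuron in the next layer is a number called a weight, and every receiving neuron also has a bias. A minimal sketch of one such layer in plain Python follows; the `dense` helper and the weight and bias values are made up for illustration. Stacking h hidden layers means applying this step h times, with an activation in between.

```python
def dense(inputs, weights, biases):
    """Fully connected layer: every input feeds every output neuron.
    weights[j][i] is the influence of input i on output neuron j (illustrative values)."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

x = [1.0, 2.0]                                  # 2 input neurons
weights = [[0.5, -1.0], [0.25, 0.75], [1.0, 1.0]]  # 3 neurons in the next layer
biases = [0.0, 0.1, -0.5]
h = dense(x, weights, biases)                   # approximately [-1.5, 1.85, 2.5]
```

During learning, only `weights` and `biases` change; during prediction they are frozen, which is the "level of influence remains constant" described above.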

(8) hidden layer activation - in step (7) we mentioned "influence". How much one neuron influences the next is governed by its activation. Activation is a way of deciding the output of a neuron based on the inputs it receives from other neurons. It therefore acts as a type of threshold, and it is key to learning in Artificial Neural Networks: without a non-linear activation, any number of stacked layers would behave like a single linear layer.
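Two widely used activation functions are ReLU, which passes positive values through and outputs 0 for negative ones, and the sigmoid, which squashes any value into the range 0 to 1. This guide does not list which activations ELDR AI offers, so treat these as general examples rather than ELDR AI's options.

```python
import math

def relu(z):
    """Pass positive values through, output 0 for negatives (a simple threshold)."""
    return max(0.0, z)

def sigmoid(z):
    """Squash any value smoothly into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

pre_activation = [-1.5, 1.85, 2.5]        # e.g. raw outputs of a dense layer
relu_out = [relu(z) for z in pre_activation]
sig_out = [sigmoid(z) for z in pre_activation]
print(relu_out)                           # [0.0, 1.85, 2.5]
print([round(s, 3) for s in sig_out])     # [0.182, 0.864, 0.924]
```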

(9) output layer - this is the final main layer of the Model.

(10) output layer activation - this is an optional layer. It allows an activation to be applied to the actual output of the Model.

In most cases you will never use this, but there are some scenarios where it can be useful. We will discuss this later.

(11) loss adjustment - this layer only applies during learning. Model learning occurs by adjusting the influence of neurons on other neurons in the network. When data is fed forward through the network, the output of the network is compared with the output we provided ELDR AI in our data output column (op). The difference between the two is measured, and the result is the "loss". The aim of Model learning is to reduce this loss as much as possible, i.e. make the Model output match the output we gave it. The loss adjustment process first calculates the loss, then feeds this information back to all the neurons in the network, updating their influence on the rest of the neurons. Each time it does this the loss should decrease.
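The cycle of "measure the loss, feed it back, adjust the influence" is usually done with gradient descent. The sketch below uses mean squared error as the loss and trains a single neuron y = w*x + b on made-up data where op = 2x + 1. The loss function, learning rate (`lr`) and step count are assumptions for illustration, not ELDR AI settings; a real network computes these adjustments for every layer via backpropagation, but each update follows the same idea.

```python
def mse(predictions, targets):
    """Mean squared error: one common way to measure the 'loss' against the op column."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)

def predict(w, b, xs):
    """Single-neuron model: output = w * x + b."""
    return [w * x + b for x in xs]

def train_step(w, b, xs, ys, lr):
    """One gradient-descent update: move w and b against the gradient of the MSE loss."""
    preds = predict(w, b, xs)
    n = len(xs)
    grad_w = 2 * sum((p - y) * x for p, y, x in zip(preds, ys, xs)) / n
    grad_b = 2 * sum(p - y for p, y in zip(preds, ys)) / n
    return w - lr * grad_w, b - lr * grad_b

xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]            # made-up op column: 2*x + 1
w, b = 0.0, 0.0
initial_loss = mse(predict(w, b, xs), ys)
for _ in range(2000):
    w, b = train_step(w, b, xs, ys, lr=0.05)
final_loss = mse(predict(w, b, xs), ys)
print(initial_loss, round(w, 3), round(b, 3))   # 41.0 2.0 1.0
```

The learning rate matters: if it is too large the updates overshoot and the loss grows instead of shrinking, which is why the loss only "should" decrease on each pass.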

(12) output - the output proper.

The image above shows how neurons in one layer influence the neurons in other layers (the red circle is the input layer, the blue hexagon is the output layer and all other shapes are hidden layers).

That concludes the ELDR AI guide to AI Model structure.